Showing posts with label artificial intelligence.

Friday, May 3, 2019

Saturday, June 30, 2018

Revolutionizing healthcare - reposted from Peter Diamandis

Wednesday, May 30, 2018

eHealth forever or technology forever?

I have been writing this "ehealth enabled browser" blog on eHealth since after I graduated with a M.Sc. in eHealth in 2012. I will probably be spending a lot less time blogging here. I still enjoy following the various topics and points of interest that I have encountered in digital health. Recently one of the great health informatics bloggers, Dr. John Halamka - the Geek Doctor - has decided to wind down his blog. Looks like he is taking more to the twitter sphere. I highly recommend you check that out if you are interested in Health Informatics (or life in general).

A little while ago something I read inspired me to think about writing an ebook version of the "ehealth enabled browser" that I have run here on the earthspiritendless URL at blogspot.ca. It turns out I may have received more inspiration than the perspiration necessary to do that. For the time being, I will settle for writing this post, which will try to encapsulate what I think I have learned by following digital health during this blog experience. To start off, let me try to explain the significance of the title of this post - eHealth forever or technology forever?

I saw a TED talk where I heard that essentially "technology lives forever" (Kevin Kelly - How Technology Evolves). To illustrate this point the presenter used the example of a steel plow, the kind our ancestors not so long ago pulled behind horses or oxen. A schematic or blueprint of this technology would allow anyone with the technology to replicate it - in essence, bringing it back to life. The technology will last many hundreds of years and would still exist in some less than functional form even after the warranty expires. When it is totally broken, you create another one. Maybe the most difficult thing is just preserving the knowledge and information to manufacture it. Well, biological beings might appear to be in the same category - cloning DNA - but let's face it, we break down more permanently than the technology we have created. Which leads me to the URL name for this blog - earthspiritendless. The final word is going to be that none of this matters and that only the Earth abides. Nothing lasts forever.

I can't remember why I named this blog URL earthspiritendless when it was supposed to be about digital health and the study of health informatics. The title of the blog - "an ehealth enabled browser" - suggests a blog about someone "browsing" or reading about ehealth. The URL name actually comes from the English translation of a Tibetan name that a Tibetan Rinpoche (reincarnated Lama) gave me in Bodhgaya, India. It is not a riff on the sportswear company that makes earthspirit brand running shoes. I always did have some fear that the company would track me down and accuse me of infringing on their brand or something. The fact of the matter is that there is no connection between ehealth and the Tibetan "nom de religion".

Since eHealth has a computer science focus, there is always going to be an attraction to future technologies, for as we know, technology evolves. If you want to try to follow where computer technology is going in the future, there would be no better futurologist to consult than Ray Kurzweil, currently a Director of Engineering at Google. It was by reading his books and starting this blog that I began to see a convergence in the ideas of transhumanism, the singularity, and health informatics - a future where we need to learn about the role of the health care system along with life extension concepts and technologies. I also read his weekly Accelerating Intelligence reports on new discoveries in science and technology, and have a link to them on the blog.

In the field of eHealth itself, one of my overall impressions is the continual need for research and systematic reviews on the efficacy of eHealth for improving health and quality of life, as well as on its return on investment. The latter just means an improvement in the quality of life. This is where the great service of such academic venues as the Journal of Medical Internet Research is focused. If I were to go back 7 years with a serious intent to study eHealth - toward a PhD for example - I would be busy reading, saving and studying journal articles. eHealth is interdisciplinary work spanning business, computer science, and health science, and it is always important to keep that in mind when reading and assessing journal articles.

I suppose if I were to generalize what I have reviewed in digital health into the categories of most interest to me and this blog, I could come up with something like this:

- Careers

- Ethics of Technology

- Life Extension

- Personal Health Records - Toward the Quantified Self

- Spiritual Machines

Careers

A spiritual master was once asked what man's greatest need is, and the answer was "having work to do". Sorry, I don't have a reference for that, and I am not even sure I have worded it correctly, but it really means that we find no real meaning in life unless we do work. Health Informatics as an educational program is an applied field where internships are developed, so it is career oriented from the beginning. One reason I studied it was the possibility of making a career change into what I perceived was an exciting field that had many new developments on the horizon. This blog was never going to provide me with an income from the Google ads (I made enough to buy a few cups of coffee, so thanks for those clicks!). I once thought of extending it as a possible business and I secured a URL called ehealthenabled.ca with the intent of developing a site/service for ways of empowering people to use ehealth technology.

That ehealthenabled.ca site didn't run for longer than a year, and I used it mostly to explore web development again in the suite of web hosting software one finds in Control Panel. I learned that WordPress is better than blogspot for creating content. My problem was that I didn't really know what kind of content to bring to market. I had a vague sort of idea that what we need for public and preventative health are ehealth technology "garages" in every neighbourhood. When cars were mechanically breaking down all the time, every neighbourhood had a repair garage - all gone now as pumping stations have consolidated and cars no longer break down as frequently. These self-service or consultant oriented ehealth stations would also have exercise equipment and all kinds of mobile ehealth technology available, including DIY ultrasound, tDCS etc - after working through the health, safety and privacy concerns of course. We know now how important exercise is for health, and having access to resistance training equipment - and/or health coaches - is a fundamental health technology.

The other and perhaps most interesting aspect of an eHealth career is the current potential for entrepreneurship, start-ups, and innovation. eHealth is an applied field, an application of ideas and technologies to solve ever changing and challenging problems in healthcare. I have participated in several Health Hackathons and it would have been great to get involved in some of these types of activities a lot earlier. I would also like to turn the clock back a few more years so I wouldn't miss the mobile app programming bus! Knew that one was coming - did nothing much about it.

Ethics of Technology

Since I work professionally in an ethics related career (university research ethics) I naturally have had an interest in technology and ethics. For many years I was more interested in bioethics generally and have some courses and conferences under my belt (including a conference presentation on RFID privacy and security concerns in healthcare). In the past several years there has been a strong shift towards focusing on the ethics of new technologies, and I trace this back to when Demis Hassabis sold his artificial intelligence company DeepMind to Google. Forming an ethics technology committee was a condition of the sale to Google. There is a lot of relevance to eHealth here because at Google, DeepMind went on to develop AlphaGo, the AI that defeated the best Go players in the world. DeepMind's AI is also being applied in healthcare, much like IBM's Watson.

There is a really comprehensive research group, the Institute for Ethics and Emerging Technologies - https://ieet.org/ - that also has an open access journal. It is interesting to follow this group. I once tried to interest them in publishing an essay I wrote about Steve Mann but I ended up posting it on my LinkedIn page - a version of it at least.

Life Extension

I think I only seriously became interested in how life extension related to eHealth by reading Ray Kurzweil. Medicine is apparently becoming more and more an information science. I think the corner was turned on that once medical reference libraries went digital. Living forever is a serious science fiction theme, but if Ray is right and exponential change is happening in computer power, discoveries in science are going to accelerate. The idea that we should be trying to stay healthy to live longer is not new, but the idea that we should seriously try to stay healthy in order to possibly benefit from new life extension technologies that will be available after the singularity - in 2030? - certainly is a new deck of cards. The movie Elysium, one of probably a thousand or so that explore life extension ideas in science fiction, had a credible healthcare technology that could cure any disease.

Is this something I personally want and would strive to help attain? Something like this is a foundational and massively transformational (thank you Peter Diamandis for that concept) movement and revolution in healthcare where the ethics of maintaining quality of life is so vital. What if we as individuals don't have a choice about how long we are going to live, if the dictates of healthcare ethics say we have to be preserved in some form of silicon based artificial intelligence while our biological DNA is being reprogrammed for cellular regeneration? Maybe it will just come down to a duty to care?

Another spiritual master was asked what was the secret to his longevity and health and he replied "Living off the interest of my investments". Sorry - no reference for that anywhere on the Internet at the moment. Maybe I heard that before the internet.

Personal Health Records - Toward the Quantified Self

The ehealth enabled blog explored a lot of studies about personal health records. An aspect tangential to that is a concept called the "quantified self". Will collecting a lot of health data in a "do it yourself" sort of way help save us? I found it interesting to read about experiences with Fitbit heart rate data, Facebook posts on personal health issues, and other such patient-led data collecting activities that have resulted in some life saving measures.

The really protracted issues that never seem to go away are the problems with data interoperability. It is hard to join an HL7 committee and help advance the work of interoperability (tried that). Not everyone is cut out to help write standards. New standards then emerge - FHIR for example. Now there is talk about how the blockchain will be used for the "provenance" of information. Who owns my health data, me, my doctor, or the data miners?

My own conclusion here is mostly about usability. Collecting our own personal health data should be like an ongoing construction or renovation project where the tools are easily accessible. Are we not building the virtual self? Logins to health records are cumbersome - so is typing up the data. Just let the healthcare system do it? We have to be able to better track and record our ongoing health concerns - with or without the healthcare system. I also think we need artificial intelligence in the health record, and in our social media, to tell us when to do things, based on our profiles and our precision medicine disposition. Out of nowhere, we should get a suggestion to get a shingles vaccination!

Spiritual Machines

Meditation to me is a form of health technology, and my teachers were like physicians who prescribed the daily practices for my own benefit, and the benefit of all sentient beings. Experimenting with the Muse EEG headband, which is designed to induce or teach one how to enter a meditative state, was a highlight not only of my blog posting, but of my own meditation experiences. Though I learned meditation in a long, hard, and traditional kind of way, I truly value the potential of technologies like the Muse. Talking AI or virtual doctors aside, exploring our own calm states of mind is going to make us better people in the long run. For then, we will know what we know, and what we don't know.

Friday, January 13, 2017

Brilliant Article by Susan Schneider - It may not feel like anything to be an alien

http://www.kurzweilai.net/it-may-not-feel-like-anything-to-be-an-alien

This was one of the most well-written and interesting articles I read all year. You don't necessarily need to have seen the movie Arrival to appreciate it:

By Susan Schneider

Humans are probably not the greatest intelligences in the universe. Earth is a relatively young planet and the oldest civilizations could be billions of years older than us. But even on Earth, Homo sapiens may not be the most intelligent species for that much longer.

The world Go, chess, and Jeopardy champions are now all AIs. AI is projected to outmode many human professions within the next few decades. And given the rapid pace of its development, AI may soon advance to artificial general intelligence—intelligence that, like human intelligence, can combine insights from different topic areas and display flexibility and common sense. From there it is a short leap to superintelligent AI, which is smarter than humans in every respect, even those that now seem firmly in the human domain, such as scientific reasoning and social skills. Each of us alive today may be one of the last rungs on the evolutionary ladder that leads from the first living cell to synthetic intelligence.

What we are only beginning to realize is that these two forms of superhuman intelligence—alien and artificial—may not be so distinct. The technological developments we are witnessing today may have all happened before, elsewhere in the universe. The transition from biological to synthetic intelligence may be a general pattern, instantiated over and over, throughout the cosmos. The universe’s greatest intelligences may be postbiological, having grown out of civilizations that were once biological. (This is a view I share with Paul Davies, Steven Dick, Martin Rees, and Seth Shostak, among others.) To judge from the human experience—the only example we have—the transition from biological to postbiological may take only a few hundred years.

I prefer the term “postbiological” to “artificial” because the contrast between biological and synthetic is not very sharp. Consider a biological mind that achieves superintelligence through purely biological enhancements, such as nanotechnologically enhanced neural minicolumns. This creature would be postbiological, although perhaps many wouldn’t call it an “AI.” Or consider a computronium that is built out of purely biological materials, like the Cylon Raider in the reimagined Battlestar Galactica TV series.

The key point is that there is no reason to expect humans to be the highest form of intelligence there is. Our brains evolved for specific environments and are greatly constrained by chemistry and historical contingencies. But technology has opened up a vast design space, offering new materials and modes of operation, as well as new ways to explore that space at a rate much faster than traditional biological evolution. And I think we already see reasons why synthetic intelligence will outperform us.

Silicon microchips already seem to be a better medium for information processing than groups of neurons. Neurons reach a peak speed of about 200 hertz, compared to gigahertz for the transistors in current microprocessors. Although the human brain is still far more intelligent than a computer, machines have almost unlimited room for improvement. It may not be long before they can be engineered to match or even exceed the intelligence of the human brain through reverse-engineering the brain and improving upon its algorithms, or through some combination of reverse engineering and judicious algorithms that aren’t based on the workings of the human brain.

In addition, an AI can be downloaded to multiple locations at once, is easily backed up and modified, and can survive under conditions that biological life has trouble with, including interstellar travel. Our measly brains are limited by cranial volume and metabolism; superintelligent AI, in stark contrast, could extend its reach across the Internet and even set up a Galaxy-wide computronium, utilizing all the matter within our galaxy to maximize computations. There is simply no contest. Superintelligent AI would be far more durable than us.

Suppose I am right. Suppose that intelligent life out there is postbiological. What should we make of this? Here, current debates over AI on Earth are telling. Two of the main points of contention—the so-called control problem and the nature of subjective experience—affect our understanding of what other alien civilizations may be like, and what they may do to us when we finally meet.

Ray Kurzweil takes an optimistic view of the postbiological phase of evolution, suggesting that humanity will merge with machines, reaching a magnificent technotopia. But Stephen Hawking, Bill Gates, Elon Musk, and others have expressed the concern that humans could lose control of superintelligent AI, as it can rewrite its own programming and outthink any control measures that we build in. This has been called the “control problem”—the problem of how we can control an AI that is both inscrutable and vastly intellectually superior to us.

Superintelligent AI could be developed during a technological singularity, an abrupt transition when ever-more-rapid technological advances, especially an intelligence explosion, slip beyond our ability to predict or understand. But even if such an intelligence arises in less dramatic fashion, there may be no way for us to predict or control its goals. Even if we could decide on what moral principles to build into our machines, moral programming is difficult to specify in a foolproof way, and such programming could be rewritten by a superintelligence in any case. A clever machine could bypass safeguards, such as kill switches, and could potentially pose an existential threat to biological life. Millions of dollars are pouring into organizations devoted to AI safety. Some of the finest minds in computer science are working on this problem. They will hopefully create safe systems, but many worry that the control problem is insurmountable.

In light of this, contact with an alien intelligence may be even more dangerous than we think. Biological aliens might well be hostile, but an extraterrestrial AI could pose an even greater risk. It may have goals that conflict with those of biological life, have at its disposal vastly superior intellectual abilities, and be far more durable than biological life.

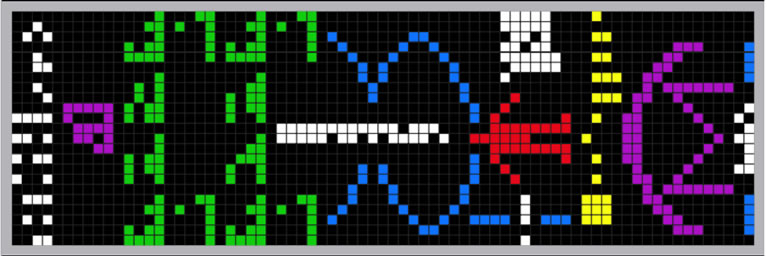

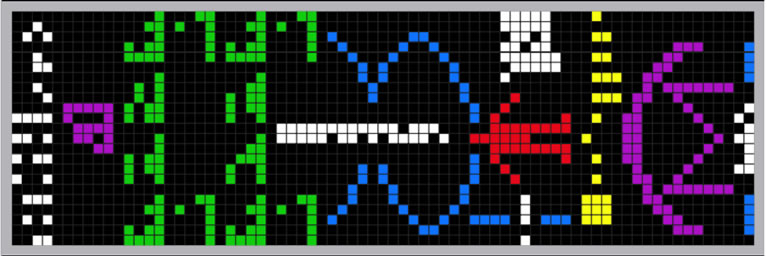

That argues for caution with so-called Active SETI, in which we do not just passively listen for signals from other civilizations, but deliberately advertise our presence. In the most famous example, in 1974 Frank Drake and Carl Sagan used the giant dish-telescope in Arecibo, Puerto Rico, to send a message to a star cluster. Advocates of Active SETI hold that, instead of just passively listening for signs of extraterrestrial intelligence, we should be using our most powerful radio transmitters, such as Arecibo, to send messages in the direction of the stars that are nearest to Earth.

Why would nonconscious machines have the same value we place on biological intelligence?

Such a program strikes me as reckless when one considers the control problem. Although a truly advanced civilization would likely have no interest in us, even one hostile civilization among millions could be catastrophic. Until we have reached the point at which we can be confident that superintelligent AI does not pose a threat to us, we should not call attention to ourselves. Advocates of Active SETI point out that our radar and radio signals are already detectable, but these signals are fairly weak and quickly blend with natural galactic noise. We would be playing with fire if we transmitted stronger signals that were intended to be heard.

The safest mindset is intellectual humility. Indeed, barring blaringly obvious scenarios in which alien ships hover over Earth, as in the recent film Arrival, I wonder if we could even recognize the technological markers of a truly advanced superintelligence. Some scientists project that superintelligent AIs could feed off black holes or create Dyson Spheres, megastructures that harness the energy of entire stars. But these are just speculations from the vantage point of our current technology; it’s simply the height of hubris to claim that we can foresee the computational abilities and energy needs of a civilization millions or even billions of years ahead of our own.

Some of the first superintelligent AIs could have cognitive systems that are roughly modeled after biological brains—the way, for instance, that deep learning systems are roughly modeled on the brain’s neural networks. Their computational structure might be comprehensible to us, at least in rough outlines. They may even retain goals that biological beings have, such as reproduction and survival.

But superintelligent AIs, being self-improving, could quickly morph into an unrecognizable form. Perhaps some will opt to retain cognitive features that are similar to those of the species they were originally modeled after, placing a design ceiling on their own cognitive architecture. Who knows? But without a ceiling, an alien superintelligence could quickly outpace our ability to make sense of its actions, or even look for it. Perhaps it would even blend in with natural features of the universe; perhaps it is in dark matter itself, as Caleb Scharf recently speculated.

An advocate of Active SETI will point out that this is precisely why we should send signals into space: let them find us, and let them design means of contact they judge to be tangible to an intellectually inferior species like us. While I agree this is a reason to consider Active SETI, the possibility of encountering a dangerous superintelligence outweighs it. For all we know, malicious superintelligences could infect planetary AI systems with viruses, and wise civilizations build cloaking devices. We humans may need to reach our own singularity before embarking upon Active SETI. Our own superintelligent AIs will be able to inform us of the prospects for galactic AI safety and how we would go about recognizing signs of superintelligence elsewhere in the universe. It takes one to know one.

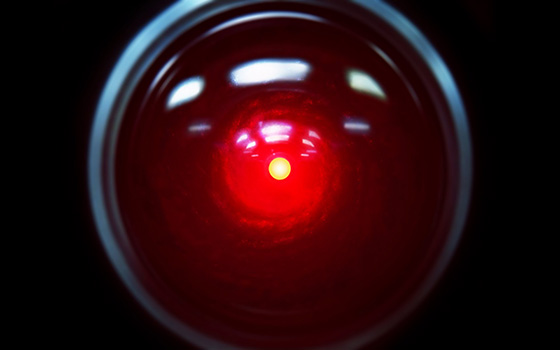

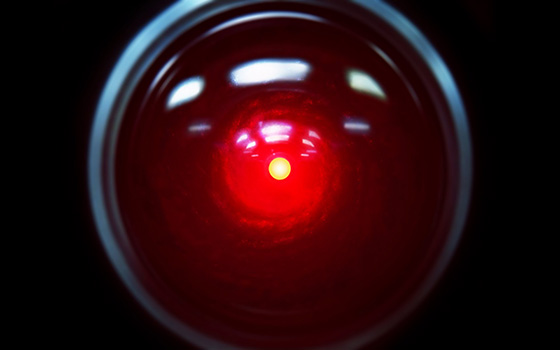

It is natural to wonder whether all this means that humans should avoid developing sophisticated AI for space exploration; after all, recall the iconic HAL in 2001: A Space Odyssey. Considering a future ban on AI in space would be premature, I believe. By the time humanity is able to investigate the universe with its own AIs, we humans will likely have reached a tipping point. We will have either already lost control of AI—in which case space projects initiated by humans will not even happen—or achieved a firmer grip on AI safety. Time will tell.

Raw intelligence is not the only issue to worry about. Normally, we expect that if we encountered advanced alien intelligence, we would likely encounter creatures with very different biologies, but they would still have minds like ours in an important sense—there would be something it is like, from the inside, to be them. Consider that every moment of your waking life, and whenever you are dreaming, it feels like something to be you. When you see the warm hues of a sunrise, or smell the aroma of freshly baked bread, you are having conscious experience. Likewise, there is also something that it is like to be an alien—or so we commonly assume. That assumption needs to be questioned though. Would superintelligent AIs even have conscious experience and, if they did, could we tell? And how would their inner lives, or lack thereof, impact us?

The question of whether AIs have an inner life is key to how we value their existence. Consciousness is the philosophical cornerstone of our moral systems, being key to our judgment of whether someone or something is a self or person rather than a mere automaton. And conversely, whether they are conscious may also be key to how they value us. The value an AI places on us may well hinge on whether it has an inner life; using its own subjective experience as a springboard, it could recognize in us the capacity for conscious experience. After all, to the extent we value the lives of other species, we value them because we feel an affinity of consciousness—thus most of us recoil from killing a chimp, but not from munching on an apple.

But how can beings with vast intellectual differences and that are made of different substrates recognize consciousness in each other? Philosophers on Earth have pondered whether consciousness is limited to biological phenomena. Superintelligent AI, should it ever wax philosophical, could similarly pose a “problem of biological consciousness” about us, asking whether we have the right stuff for experience.

Who knows what intellectual path a superintelligence would take to tell whether we are conscious. But for our part, how can we humans tell whether an AI is conscious? Unfortunately, this will be difficult. Right now, you can tell you are having experience, as it feels like something to be you. You are your own paradigm case of conscious experience. And you believe that other people and certain nonhuman animals are likely conscious, for they are neurophysiologically similar to you. But how are you supposed to tell whether something made of a different substrate can have experience?

Consider, for instance, a silicon-based superintelligence. Although both silicon microchips and neural minicolumns process information, for all we now know they could differ molecularly in ways that impact consciousness. After all, we suspect that carbon is chemically more suitable to complex life than silicon is. If the chemical differences between silicon and carbon impact something as important as life itself, we should not rule out the possibility that the chemical differences also impact other key functions, such as whether silicon gives rise to consciousness.

The conditions required for consciousness are actively debated by AI researchers, neuroscientists, and philosophers of mind. Resolving them might require an empirical approach that is informed by philosophy—a means of determining, on a case-by-case basis, whether an information-processing system supports consciousness, and under what conditions.

Here’s a suggestion, a way we can at least enhance our understanding of whether silicon supports consciousness. Silicon-based brain chips are already under development as a treatment for various memory-related conditions, such as Alzheimer’s and post-traumatic stress disorder. If, at some point, chips are used in areas of the brain responsible for conscious functions, such as attention and working memory, we could begin to understand whether silicon is a substrate for consciousness. We might find that replacing a brain region with a chip causes a loss of certain experience, like the episodes that Oliver Sacks wrote about. Chip engineers could then try a different, non-neural, substrate, but they may eventually find that the only “chip” that works is one that is engineered from biological neurons. This procedure would serve as a means of determining whether artificial systems can be conscious, at least when they are placed in a larger system that we already believe is conscious.

Even if silicon can give rise to consciousness, it might do so only in very specific circumstances; the properties that give rise to sophisticated information processing (and which AI developers care about) may not be the same properties that yield consciousness. Consciousness may require consciousness engineering—a deliberate engineering effort to put consciousness in machines.

Here’s my worry. Who, on Earth or on distant planets, would aim to engineer consciousness into AI systems themselves? Indeed, when I think of existing AI programs on Earth, I can see certain reasons why AI engineers might actively avoid creating conscious machines.

Robots are currently being designed to take care of the elderly in Japan, clean up nuclear reactors, and fight our wars. Naturally, the question has arisen: Is it ethical to use robots for such tasks if they turn out to be conscious? How would that differ from breeding humans for these tasks? If I were an AI director at Google or Facebook, thinking of future projects, I wouldn’t want the ethical muddle of inadvertently designing a sentient system. Developing a system that turns out to be sentient could lead to accusations of robot slavery and other public-relations nightmares, and it could even lead to a ban on the use of AI technology in the very areas the AI was designed to be used in. A natural response to this is to seek architectures and substrates in which robots are not conscious.

Further, it may be more efficient for a self-improving superintelligence to eliminate consciousness. Think about how consciousness works in the human case. Only a small percentage of human mental processing is accessible to the conscious mind. Consciousness is correlated with novel learning tasks that require attention and focus. A superintelligence would possess expert-level knowledge in every domain, with rapid-fire computations ranging over vast databases that could include the entire Internet and ultimately encompass an entire galaxy. What would be novel to it? What would require slow, deliberative focus? Wouldn’t it have mastered everything already? Like an experienced driver on a familiar road, it could rely on nonconscious processing. The simple consideration of efficiency suggests, depressingly, that the most intelligent systems will not be conscious. On cosmological scales, consciousness may be a blip, a momentary flowering of experience before the universe reverts to mindlessness.

If people suspect that AI isn’t conscious, they will likely view the suggestion that intelligence tends to become postbiological with dismay. And it heightens our existential worries. Why would nonconscious machines have the same value we place on biological intelligence, which is conscious?

Soon, humans will no longer be the measure of intelligence on Earth. And perhaps already, elsewhere in the cosmos, superintelligent AI, not biological life, has reached the highest intellectual plateaus. But perhaps biological life is distinctive in another significant respect—conscious experience. For all we know, sentient AI will require a deliberate engineering effort by a benevolent species, seeking to create machines that feel. Perhaps a benevolent species will see fit to create their own AI mindchildren. Or perhaps future humans will engage in some consciousness engineering, and send sentience to the stars.

SUSAN SCHNEIDER is an associate professor of philosophy and cognitive science at the University of Connecticut and an affiliated faculty member at the Institute for Advanced Study, the Center of Theological Inquiry, YHouse, and the Ethics and Technology Group at Yale’s Interdisciplinary Center of Bioethics. She has written several books, including Science Fiction and Philosophy: From Time Travel to Superintelligence. For more information, visit SchneiderWebsite.com.

This was one of the most well-written and interesting articles I read all year. You don't necessarily need to have seen the movie Arrival to appreciate it:

It may not feel like anything to be an alien

December 23, 2016

An alien message in the movie Arrival (credit: Paramount Pictures)

Humans are probably not the greatest intelligences in the universe. Earth is a relatively young planet and the oldest civilizations could be billions of years older than us. But even on Earth, Homo sapiens may not be the most intelligent species for that much longer.

The world Go, chess, and Jeopardy champions are now all AIs. AI is projected to outmode many human professions within the next few decades. And given the rapid pace of its development, AI may soon advance to artificial general intelligence—intelligence that, like human intelligence, can combine insights from different topic areas and display flexibility and common sense. From there it is a short leap to superintelligent AI, which is smarter than humans in every respect, even those that now seem firmly in the human domain, such as scientific reasoning and social skills. Each of us alive today may be one of the last rungs on the evolutionary ladder that leads from the first living cell to synthetic intelligence.

What we are only beginning to realize is that these two forms of superhuman intelligence—alien and artificial—may not be so distinct. The technological developments we are witnessing today may have all happened before, elsewhere in the universe. The transition from biological to synthetic intelligence may be a general pattern, instantiated over and over, throughout the cosmos. The universe’s greatest intelligences may be postbiological, having grown out of civilizations that were once biological. (This is a view I share with Paul Davies, Steven Dick, Martin Rees, and Seth Shostak, among others.) To judge from the human experience—the only example we have—the transition from biological to postbiological may take only a few hundred years.

I prefer the term “postbiological” to “artificial” because the contrast between biological and synthetic is not very sharp. Consider a biological mind that achieves superintelligence through purely biological enhancements, such as nanotechnologically enhanced neural minicolumns. This creature would be postbiological, although perhaps many wouldn’t call it an “AI.” Or consider a computronium that is built out of purely biological materials, like the Cylon Raider in the reimagined Battlestar Galactica TV series.

The key point is that there is no reason to expect humans to be the highest form of intelligence there is. Our brains evolved for specific environments and are greatly constrained by chemistry and historical contingencies. But technology has opened up a vast design space, offering new materials and modes of operation, as well as new ways to explore that space at a rate much faster than traditional biological evolution. And I think we already see reasons why synthetic intelligence will outperform us.

An extraterrestrial AI could have goals that conflict with those of biological life

Silicon microchips already seem to be a better medium for information processing than groups of neurons. Neurons reach a peak speed of about 200 hertz, compared to gigahertz for the transistors in current microprocessors. Although the human brain is still far more intelligent than a computer, machines have almost unlimited room for improvement. It may not be long before they can be engineered to match or even exceed the intelligence of the human brain through reverse-engineering the brain and improving upon its algorithms, or through some combination of reverse engineering and judicious algorithms that aren’t based on the workings of the human brain.

In addition, an AI can be downloaded to multiple locations at once, is easily backed up and modified, and can survive under conditions that biological life has trouble with, including interstellar travel. Our measly brains are limited by cranial volume and metabolism; superintelligent AI, in stark contrast, could extend its reach across the Internet and even set up a Galaxy-wide computronium, utilizing all the matter within our galaxy to maximize computations. There is simply no contest. Superintelligent AI would be far more durable than us.

Suppose I am right. Suppose that intelligent life out there is postbiological. What should we make of this? Here, current debates over AI on Earth are telling. Two of the main points of contention—the so-called control problem and the nature of subjective experience—affect our understanding of what other alien civilizations may be like, and what they may do to us when we finally meet.

“I’m sorry, Dave” - HAL in 2001: A Space Odyssey. If you think intelligent machines are dangerous, imagine what intelligent extraterrestrial machines could do. (credit: YouTube/Warner Bros.)

The Arecibo message was broadcast into space a single time, for 3 minutes, in November 1974 (credit: SETI Institute)

Westworld (credit: HBO)

Sunday, May 1, 2016

South Korean eHealth Connections

Today's post is about recent random connections between eHealth and South Korea.

I came across a South Korean eHealth company called Health Connect, which is a collaboration between SK Telecom, one of the largest telecommunication companies, and the premier university in South Korea, Seoul University. Seoul University Medical Centre is one of the top NIH funded clinical trial research centres in the world. I like their website design.

Earlier last year I was contacted by someone at Samsung Medical Centre about eREB (Research Ethics Board) online systems. They developed their own in-house system for online research ethics review, and I have a research interest in "in-house" eREB systems development (having programmed and designed one myself). Too bad my Korean is still not good enough to understand everything on their website in Korean, even though I lived in Korea for almost 4 years, have a Korean family, and still watch Korean TV dramas every day!

I would like to bring to your attention the prevalence of the Fitbit device. I went to a Health & Safety meeting and noticed that half of the people were wearing Fitbits on their wrists. There are activity competitions with teams at work and people are buying more Fitbits because the cheap pedometers reset unexpectedly and data is lost. A year or so ago I read an article that Fitbit was a fading fad, but that just does not seem to currently be the case. Outside of work, I am seeing more and more people wearing and connecting to these devices. Some days they even look ubiquitous.

Now here is the surprising Korean connection - the CEO and co-founder of Fitbit, James Park, is Korean-American!

One of the major problems I am having now with my Fitbit device is Ubuntu. The Fitbit dongle and sync tracking aren't supported on Linux or Ubuntu. Benoit Allard wrote a free program called Galileo for this. I had it working just fine when I was using Ubuntu 14.04, but then I upgraded to Ubuntu 16.04 and it isn't working. I am hopeless at trying to share two Fitbits on the Mac and the iPad, so I really need to get this working again on Ubuntu 16.04 so I can sync and see my online data. I have started to dip my toes into the murky depths of the Ubuntu Galileo setup, without too much hope. I posted the bug on Allard's Galileo website, though.

An interesting direction for Google's DeepMind appeared in the news and I immediately tweeted it out on my eHealth Twitter feed: "Why does Google want British patients' confidential records?" http://ibt.uk/A6XCB

I have blogged about DeepMind before < http://earthspiritendless.blogspot.ca/2014/02/ethics-boards-for-googledeepmind-end-of.html >. DeepMind was recently the technology behind AlphaGo, an artificial intelligence Go program that beat the best Go player in the world. The best Go player in the world is the South Korean Lee Sedol, fitting for this slightly Korean blog post. It doesn't surprise me that DeepMind is following the way of IBM and Watson, using AI to make discoveries in big health data.

Well that is the South Korean eHealth Connection for now.

Tuesday, August 11, 2015

Humans TV Series Reviews on IEET

I noticed two reviews on the Institute for Ethics and Emerging Technologies (IEET) site of the new TV series "Humans".

Spoiler alert in effect, but here is the review by Adrian Cull.

Here is the review by Nicole Sallak Anderson.

I have not seen the TV series but I think the ideas for this kind of science fiction were mined from the film directed by Steven Spielberg in 2001 called "AI".

As one reviewer for the Humans TV series says, it is good that the public is starting to think about the ethics of this technology.

Tuesday, February 11, 2014

Ethics boards for Google/Deepmind: The end of computer programming?

Hat tip to the folks on the LinkedIn CAREB group who posted this story from Forbes, "Inside Google's Mysterious Ethics Board". OK. Here is my initial impression. The ethics surrounding new technology is becoming as serious as stem cell bioethics. One of the authors of the Forbes article also contributes to the Institute for Ethics and Emerging Technologies (IEET) - appropriately.

It was actually Deepmind, an Artificial Intelligence (AI) company that Google bought, that insisted on the technology ethics board as a condition of the sale. The founder of that company, Demis Hassabis, is interesting to follow. This "technology ethics board" is not, I think, at all the same as an Institutional Review Board or Research Ethics Board. It is more of an internal ethics review committee, probably examining agile developments of new technology. It might just be corporate whitewash, or it might actually be driven by social and moral responsibility, as well as a dash of liability insurance, to paraphrase the IEET author.

Deepmind, which has the most minimalistic website I have ever seen, is advancing AI into computers that can learn and program themselves. This must be the vanguard of the end of programming, as current brain research is predicting. Try reading this paper about how Deepmind programmed a computer to win Atari games: "Playing Atari with Deep Reinforcement Learning". Understand now why programming might come to an end when computers learn how to program themselves?
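To make "learning instead of being programmed" a little more concrete, here is a minimal sketch of Q-learning, the family of methods that paper builds on. This is my own toy illustration and not Deepmind's code: it uses a plain lookup table rather than a deep neural network, and the five-cell corridor, the `train_corridor` name and every parameter value are assumptions made up for the example. The program is never told that "go right" is the answer; it only receives a reward when it reaches the last cell.

```python
import random

def train_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor: start in cell 0, reward 1 on reaching the last cell."""
    rng = random.Random(seed)
    # q[state][action]; action 0 = step left, action 1 = step right
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        state = 0
        while state != n_states - 1:
            # Explore at random sometimes (and on ties), otherwise take the action believed best
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            # Stepping left from cell 0 leaves the agent where it is
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == n_states - 1 else 0.0
            # Q-learning update: move the estimate toward reward + discounted best future value
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q_table = train_corridor()
# Read off the learned behaviour for every non-terminal cell
policy = ["right" if right > left else "left" for left, right in q_table[:-1]]
print(policy)
```

With these settings the learned policy should come out as "right" in every cell, even though no line of the program says so. The Atari work replaces the table with a neural network that reads the screen pixels, which is where the "deep" comes in.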

What possible relevance could this have for ehealth, which is the primary purpose of this blog? Well, as this article on Recode says about the founder of Deepmind: "(Demis) Hassabis has closely studied how the brain functions — particularly the hippocampus, which is associated with memory — and worked on algorithms that closely model these natural processes." Apparently, the journal Science says this research was one of the top scientific breakthroughs one year (this from Wikipedia):

Hassabis then left the video game industry, switching to cognitive neuroscience. Working in the field of autobiographical memory and amnesia he authored several influential papers.[14] The paper argued that patients with damage to their hippocampus, known to cause amnesia, were also unable to imagine themselves in new experiences. Importantly this established a link between the constructive process of imagination and the reconstructive process of episodic memory recall. Based on these findings and a follow-up fMRI study,[15] Hassabis developed his ideas into a new theoretical account of the episodic memory system identifying scene construction, the generation and online maintenance of a complex and coherent scene, as a key process underlying both memory recall and imagination.[16] This work was widely covered in the mainstream media[17] and was listed in the top 10 scientific breakthroughs of the year (at number 9) in any field by the journal Science.[1

Still, that isn't really about health informatics. Sorry. Except if the ethics of new technology in health and medicine is important? There is a real intersection, I believe, between health informatics and health technology assessment.

Sunday, December 1, 2013

IBM's Watson now an API for cloud development - is there a Doctor in the House?

I am still trying to pin down a focused eHealth application for this, but IBM has opened an API (application programming interface) for the Watson cognitive computing intelligence. This sounds like developers can build smartphone applications that query the cognitive fireworks of the Watson computer that defeated the best human players in the world at the TV game show Jeopardy.

For eHealth, the API needs to tap into the right data content. IBM already has several services for this:

MD Buyline: This provider of supply chain solutions for hospitals and healthcare systems is developing an app to allow clinical and financial users to make real-time, informed decisions about medical device purchases, to improve quality, value, outcomes and patient satisfaction. Hippocrates powered by IBM Watson will provide users with access to a helpful research assistant that provides fast, evidence based recommendations from a wealth of data, to help ensure medical organizations are making the best decisions for their physicians' and patients' needs.

Guess there's not much else you could ask for? But yes there is - Welltok:

Welltok: A pioneer in the emerging field of Social Health Management™, Welltok is developing an app that will create Intelligent Health Itineraries™ for consumers. These personalized itineraries, sponsored by health plans, health systems and health retailers, will include tailored activities, relevant content and condition management programs, and will reward users for engaging in healthy behaviors. Consumers who use Welltok's app -- CaféWell Concierge powered by IBM Watson – will participate in conversations about their health with Watson. By leveraging Watson's ability to learn from every interaction, the app will offer insights tailored to each individual’s health needs.

And there is more. WatsonPaths is a diagnostic education program, and perhaps even a clinical decision support aid for diagnosis? All this from a game of Jeopardy?

Tuesday, April 16, 2013

Brain research projects - no more digital computers or programming!?

There are several huge "artificial brain" research projects going on now. There is one in Israel, one in the United States (biggest NIH research grant in history), and the one in Europe is called the Blue Brain Project (BBP). One of the leading directors of the BBP is Henry Markram. I was listening to an interview with him in which he stated that within 10 years, once the virtual brain is created, computers will no longer need to be programmed - it would mean the end of the line for digital computers! These computers would not need to be programmed because they will have the ability to learn by themselves. This really astonishes me. He further stated that the desktop computers of the future will be both digital and artificial brain.

The eHealth implications for the BBP are astronomical. At first the goal of such a virtual brain would be simulations to test drug reactions for Parkinson's or Alzheimer's. Of course, those are more translational bioinformatics types of applications, but it would mean that every ordinary computer device would have access to a Dr. Watson type of medical intelligence.

In the spirit of this movement towards neuroscience integration of knowledge and huge research, I am reading Ray Kurzweil's new book "How to Create a Mind: The Secret of Human Thought Revealed". Parts of the book are beyond my ken, especially the chapters describing how the brain grid is constructed and how it works, but I like reading Kurzweil because his theories of the evolution of computers are compelling. Not everyone appreciates the Kurzweil vision, and I found very humorous a review of Kurzweil by Don Tapscott in the Globe and Mail where he quotes a detractor of his writings:

He also has many detractors. Douglas Hofstadter, the Pulitzer Prize-winning author of Gödel, Escher, Bach, once said that Kurzweil's books are “a very bizarre mixture of ideas that are solid and good with ideas that are crazy. It's as if you took a lot of very good food and some dog excrement and blended it all up so that you can't possibly figure out what's good or bad.”

I have to return "How to Create a Mind" to the library now, but I almost finished it. Can't say I completely understand the "hidden Markov models". I also don't fully agree with Hofstadter. Kurzweil even has quotes from Albus Dumbledore and one of the Weasley clan, and I don't think that detracts from the scholarly work. Many times while reading the book I got the feeling that it was written for both a human and a computer audience. Future "Hals" from 2001: A Space Odyssey are a target audience, and I think this book is a great contribution to computer understanding of human intelligence and how the brain works.
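For anyone else puzzled by hidden Markov models, here is a minimal sketch of the basic idea, which may help. It is not taken from Kurzweil's book: the two hidden states, the three observable symptoms and all of the probabilities are made-up numbers for illustration, and `forward` is just my name for the standard forward algorithm. The patient's true condition is hidden; we only see the symptoms, and the model works out how likely a sequence of symptoms is and which hidden state most plausibly lies behind the last one.

```python
# Hypothetical model: all states, symptoms and probabilities are illustrative assumptions
STATES = ("Healthy", "Fever")
START = {"Healthy": 0.6, "Fever": 0.4}
TRANSITION = {
    "Healthy": {"Healthy": 0.7, "Fever": 0.3},
    "Fever": {"Healthy": 0.4, "Fever": 0.6},
}
EMISSION = {
    "Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
    "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6},
}

def forward(observations):
    """Forward algorithm: joint probability of the observations and each final hidden state."""
    # Day one: prior belief about the state times how well it explains the first symptom
    alpha = {s: START[s] * EMISSION[s][observations[0]] for s in STATES}
    for symptom in observations[1:]:
        # Sum over every way of arriving in state s, then weigh by the new symptom
        alpha = {
            s: sum(alpha[prev] * TRANSITION[prev][s] for prev in STATES) * EMISSION[s][symptom]
            for s in STATES
        }
    return alpha

final = forward(["normal", "cold", "dizzy"])
likelihood = sum(final.values())  # probability of seeing this symptom sequence at all
posterior_fever = final["Fever"] / likelihood  # belief that the last day was a fever day
print(round(likelihood, 5), round(posterior_fever, 3))
```

The point is that the model never observes "Healthy" or "Fever" directly; it infers them from what it can see, which is roughly how Kurzweil describes layers of pattern recognizers working from evidence.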

Saturday, October 20, 2012

Paging Dr. Watson

I did watch Watson defeat the best Jeopardy players in the world, last year or so, and of course as an eHealth student, I knew this would be a fantastic machine to program for medicine, in particular, diagnosis. I knew about other attempts at artificial intelligence for diagnosis like Isabel. Isabel is one of the leading "differential clinical decision support" tools for physicians. There were many early experiments in artificial intelligence for medicine, and I believe the editor of our Biomedical Informatics textbook, Edward H. Shortliffe, was also an early pioneer, as the chapter would attest. The writer of this article on "Paging Dr. Watson" mentions a book called "How Doctors Think". I read it, and it is excellent. Another book that is relevant is "Every Patient Tells a Story" by Lisa Sanders, who advised on the House TV series. One theme of the book is the loss of skill in the physical exam by physicians and the over-reliance on technology for diagnosis. I am just saying.

Paging Dr. Watson: artificial intelligence as a prescription for health care

October 18, 2012

“It’s not humanly possible to practice the best possible medicine. We need machines,” said Herbert Chase, a professor of clinical medicine at Columbia University and member of IBM’s Watson Healthcare Advisory Board, Wired Science reports.

“A machine like [IBM's Watson], with massively parallel processing, is like 500,000 of me sitting at Google and Pubmed, trying to find the right information.”

Yet though Watson is clearly a powerful tool, doctors like physician Mark Graber, a former chief of the Veterans Administration hospital in Northport, New York, wonder if it’s the right tool. “Watson may solve the small fraction of cases where inadequate knowledge is the issue,” he said. “But medical school works. Doctors have enough knowledge. They struggle because they don’t have enough time, because they didn’t get a second opinion.”