Showing posts with label Life Extension. Show all posts

Saturday, July 17, 2021

11 Year Old Boy Graduates University in Physics, Plans Research on Immortality

An eleven-year-old boy, Laurent Simons, graduated with a degree in physics and has mapped out a plan to achieve human immortality by replacing body parts with mechanical parts. I don't know how he gets there from a knowledge base in quantum physics, but anyway, kudos to him for dreaming big!

https://www.newsweek.com/laurent-simons-11-second-youngest-graduate-ever-plans-make-humans-immortal-1607168

"This is the first puzzle piece in my goal of replacing body parts with mechanical parts," Simons said.

"Immortality, that is my goal. I want to be able to replace as many body parts as possible with mechanical parts. I've mapped out a path to get there. You can see it as a big puzzle. Quantum physics—the study of the smallest particles—is the first piece of the puzzle," he said.

"Two things are important in such a study: acquiring knowledge and applying that knowledge. To achieve the second, I want to work with the best professors in the world, look inside their brains and find out how they think," Simons added.

Friday, January 13, 2017

Brilliant Article by Susan Schneider - It may not feel like anything to be an alien

http://www.kurzweilai.net/it-may-not-feel-like-anything-to-be-an-alien

This was one of the most well-written and interesting articles I read all year. You don't necessarily need to have seen the movie Arrival to appreciate it:

By Susan Schneider

Humans are probably not the greatest intelligences in the universe. Earth is a relatively young planet and the oldest civilizations could be billions of years older than us. But even on Earth, Homo sapiens may not be the most intelligent species for that much longer.

The world Go, chess, and Jeopardy champions are now all AIs. AI is projected to outmode many human professions within the next few decades. And given the rapid pace of its development, AI may soon advance to artificial general intelligence—intelligence that, like human intelligence, can combine insights from different topic areas and display flexibility and common sense. From there it is a short leap to superintelligent AI, which is smarter than humans in every respect, even those that now seem firmly in the human domain, such as scientific reasoning and social skills. Each of us alive today may be one of the last rungs on the evolutionary ladder that leads from the first living cell to synthetic intelligence.

What we are only beginning to realize is that these two forms of superhuman intelligence—alien and artificial—may not be so distinct. The technological developments we are witnessing today may have all happened before, elsewhere in the universe. The transition from biological to synthetic intelligence may be a general pattern, instantiated over and over, throughout the cosmos. The universe’s greatest intelligences may be postbiological, having grown out of civilizations that were once biological. (This is a view I share with Paul Davies, Steven Dick, Martin Rees, and Seth Shostak, among others.) To judge from the human experience—the only example we have—the transition from biological to postbiological may take only a few hundred years.

I prefer the term “postbiological” to “artificial” because the contrast between biological and synthetic is not very sharp. Consider a biological mind that achieves superintelligence through purely biological enhancements, such as nanotechnologically enhanced neural minicolumns. This creature would be postbiological, although perhaps many wouldn’t call it an “AI.” Or consider a computronium that is built out of purely biological materials, like the Cylon Raider in the reimagined Battlestar Galactica TV series.

The key point is that there is no reason to expect humans to be the highest form of intelligence there is. Our brains evolved for specific environments and are greatly constrained by chemistry and historical contingencies. But technology has opened up a vast design space, offering new materials and modes of operation, as well as new ways to explore that space at a rate much faster than traditional biological evolution. And I think we already see reasons why synthetic intelligence will outperform us.

An extraterrestrial AI could have goals that conflict with those of biological life

Silicon microchips already seem to be a better medium for information processing than groups of neurons. Neurons reach a peak speed of about 200 hertz, compared to gigahertz for the transistors in current microprocessors. Although the human brain is still far more intelligent than a computer, machines have almost unlimited room for improvement. It may not be long before they can be engineered to match or even exceed the intelligence of the human brain through reverse-engineering the brain and improving upon its algorithms, or through some combination of reverse engineering and judicious algorithms that aren’t based on the workings of the human brain.

In addition, an AI can be downloaded to multiple locations at once, is easily backed up and modified, and can survive under conditions that biological life has trouble with, including interstellar travel. Our measly brains are limited by cranial volume and metabolism; superintelligent AI, in stark contrast, could extend its reach across the Internet and even set up a Galaxy-wide computronium, utilizing all the matter within our galaxy to maximize computations. There is simply no contest. Superintelligent AI would be far more durable than us.

Suppose I am right. Suppose that intelligent life out there is postbiological. What should we make of this? Here, current debates over AI on Earth are telling. Two of the main points of contention—the so-called control problem and the nature of subjective experience—affect our understanding of what other alien civilizations may be like, and what they may do to us when we finally meet.

Ray Kurzweil takes an optimistic view of the postbiological phase of evolution, suggesting that humanity will merge with machines, reaching a magnificent technotopia. But Stephen Hawking, Bill Gates, Elon Musk, and others have expressed the concern that humans could lose control of superintelligent AI, as it can rewrite its own programming and outthink any control measures that we build in. This has been called the “control problem”—the problem of how we can control an AI that is both inscrutable and vastly intellectually superior to us.

Superintelligent AI could be developed during a technological singularity, an abrupt transition when ever-more-rapid technological advances—especially an intelligence explosion—slip beyond our ability to predict or understand. But even if such an intelligence arises in less dramatic fashion, there may be no way for us to predict or control its goals. Even if we could decide on what moral principles to build into our machines, moral programming is difficult to specify in a foolproof way, and such programming could be rewritten by a superintelligence in any case. A clever machine could bypass safeguards, such as kill switches, and could potentially pose an existential threat to biological life. Millions of dollars are pouring into organizations devoted to AI safety. Some of the finest minds in computer science are working on this problem. They will hopefully create safe systems, but many worry that the control problem is insurmountable.

In light of this, contact with an alien intelligence may be even more dangerous than we think. Biological aliens might well be hostile, but an extraterrestrial AI could pose an even greater risk. It may have goals that conflict with those of biological life, have at its disposal vastly superior intellectual abilities, and be far more durable than biological life.

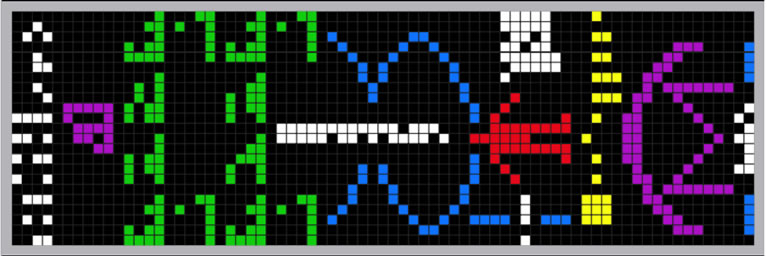

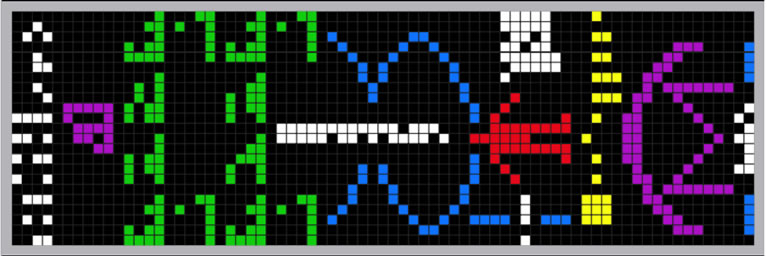

That argues for caution with so-called Active SETI, in which we do not just passively listen for signals from other civilizations, but deliberately advertise our presence. In the most famous example, in 1974 Frank Drake and Carl Sagan used the giant dish-telescope in Arecibo, Puerto Rico, to send a message to a star cluster. Advocates of Active SETI hold that, instead of just passively listening for signs of extraterrestrial intelligence, we should be using our most powerful radio transmitters, such as Arecibo, to send messages in the direction of the stars that are nearest to Earth.

Why would nonconscious machines have the same value we place on biological intelligence?

Such a program strikes me as reckless when one considers the control problem. Although a truly advanced civilization would likely have no interest in us, even one hostile civilization among millions could be catastrophic. Until we have reached the point at which we can be confident that superintelligent AI does not pose a threat to us, we should not call attention to ourselves. Advocates of Active SETI point out that our radar and radio signals are already detectable, but these signals are fairly weak and quickly blend with natural galactic noise. We would be playing with fire if we transmitted stronger signals that were intended to be heard.

The safest mindset is intellectual humility. Indeed, barring blaringly obvious scenarios in which alien ships hover over Earth, as in the recent film Arrival, I wonder if we could even recognize the technological markers of a truly advanced superintelligence. Some scientists project that superintelligent AIs could feed off black holes or create Dyson Spheres, megastructures that harness the energy of entire stars. But these are just speculations from the vantage point of our current technology; it’s simply the height of hubris to claim that we can foresee the computational abilities and energy needs of a civilization millions or even billions of years ahead of our own.

Some of the first superintelligent AIs could have cognitive systems that are roughly modeled after biological brains—the way, for instance, that deep learning systems are roughly modeled on the brain’s neural networks. Their computational structure might be comprehensible to us, at least in rough outlines. They may even retain goals that biological beings have, such as reproduction and survival.

But superintelligent AIs, being self-improving, could quickly morph into an unrecognizable form. Perhaps some will opt to retain cognitive features that are similar to those of the species they were originally modeled after, placing a design ceiling on their own cognitive architecture. Who knows? But without a ceiling, an alien superintelligence could quickly outpace our ability to make sense of its actions, or even look for it. Perhaps it would even blend in with natural features of the universe; perhaps it is in dark matter itself, as Caleb Scharf recently speculated.

An advocate of Active SETI will point out that this is precisely why we should send signals into space—let them find us, and let them design means of contact they judge to be tangible to an intellectually inferior species like us. While I agree this is a reason to consider Active SETI, the possibility of encountering a dangerous superintelligence outweighs it. For all we know, malicious superintelligences could infect planetary AI systems with viruses, and wise civilizations build cloaking devices. We humans may need to reach our own singularity before embarking upon Active SETI. Our own superintelligent AIs will be able to inform us of the prospects for galactic AI safety and how we would go about recognizing signs of superintelligence elsewhere in the universe. It takes one to know one.

It is natural to wonder whether all this means that humans should avoid developing sophisticated AI for space exploration; after all, recall the iconic HAL in 2001: A Space Odyssey. Considering a future ban on AI in space would be premature, I believe. By the time humanity is able to investigate the universe with its own AIs, we humans will likely have reached a tipping point. We will have either already lost control of AI—in which case space projects initiated by humans will not even happen—or achieved a firmer grip on AI safety. Time will tell.

Raw intelligence is not the only issue to worry about. Normally, we expect that if we encountered advanced alien intelligence, we would likely encounter creatures with very different biologies, but they would still have minds like ours in an important sense—there would be something it is like, from the inside, to be them. Consider that every moment of your waking life, and whenever you are dreaming, it feels like something to be you. When you see the warm hues of a sunrise, or smell the aroma of freshly baked bread, you are having conscious experience. Likewise, there is also something that it is like to be an alien—or so we commonly assume. That assumption needs to be questioned though. Would superintelligent AIs even have conscious experience and, if they did, could we tell? And how would their inner lives, or lack thereof, impact us?

The question of whether AIs have an inner life is key to how we value their existence. Consciousness is the philosophical cornerstone of our moral systems, being key to our judgment of whether someone or something is a self or person rather than a mere automaton. And conversely, whether they are conscious may also be key to how they value us. The value an AI places on us may well hinge on whether it has an inner life; using its own subjective experience as a springboard, it could recognize in us the capacity for conscious experience. After all, to the extent we value the lives of other species, we value them because we feel an affinity of consciousness—thus most of us recoil from killing a chimp, but not from munching on an apple.

But how can beings with vast intellectual differences and that are made of different substrates recognize consciousness in each other? Philosophers on Earth have pondered whether consciousness is limited to biological phenomena. Superintelligent AI, should it ever wax philosophical, could similarly pose a “problem of biological consciousness” about us, asking whether we have the right stuff for experience.

Who knows what intellectual path a superintelligence would take to tell whether we are conscious. But for our part, how can we humans tell whether an AI is conscious? Unfortunately, this will be difficult. Right now, you can tell you are having experience, as it feels like something to be you. You are your own paradigm case of conscious experience. And you believe that other people and certain nonhuman animals are likely conscious, for they are neurophysiologically similar to you. But how are you supposed to tell whether something made of a different substrate can have experience?

Consider, for instance, a silicon-based superintelligence. Although both silicon microchips and neural minicolumns process information, for all we now know they could differ molecularly in ways that impact consciousness. After all, we suspect that carbon is chemically more suitable to complex life than silicon is. If the chemical differences between silicon and carbon impact something as important as life itself, we should not rule out the possibility that the chemical differences also impact other key functions, such as whether silicon gives rise to consciousness.

The conditions required for consciousness are actively debated by AI researchers, neuroscientists, and philosophers of mind. Resolving them might require an empirical approach that is informed by philosophy—a means of determining, on a case-by-case basis, whether an information-processing system supports consciousness, and under what conditions.

Here’s a suggestion, a way we can at least enhance our understanding of whether silicon supports consciousness. Silicon-based brain chips are already under development as a treatment for various memory-related conditions, such as Alzheimer’s and post-traumatic stress disorder. If, at some point, chips are used in areas of the brain responsible for conscious functions, such as attention and working memory, we could begin to understand whether silicon is a substrate for consciousness. We might find that replacing a brain region with a chip causes a loss of certain experience, like the episodes that Oliver Sacks wrote about. Chip engineers could then try a different, non-neural, substrate, but they may eventually find that the only “chip” that works is one that is engineered from biological neurons. This procedure would serve as a means of determining whether artificial systems can be conscious, at least when they are placed in a larger system that we already believe is conscious.

Even if silicon can give rise to consciousness, it might do so only in very specific circumstances; the properties that give rise to sophisticated information processing (and which AI developers care about) may not be the same properties that yield consciousness. Consciousness may require consciousness engineering—a deliberate engineering effort to put consciousness in machines.

Here’s my worry. Who, on Earth or on distant planets, would aim to engineer consciousness into AI systems themselves? Indeed, when I think of existing AI programs on Earth, I can see certain reasons why AI engineers might actively avoid creating conscious machines.

Robots are currently being designed to take care of the elderly in Japan, clean up nuclear reactors, and fight our wars. Naturally, the question has arisen: Is it ethical to use robots for such tasks if they turn out to be conscious? How would that differ from breeding humans for these tasks? If I were an AI director at Google or Facebook, thinking of future projects, I wouldn’t want the ethical muddle of inadvertently designing a sentient system. Developing a system that turns out to be sentient could lead to accusations of robot slavery and other public-relations nightmares, and it could even lead to a ban on the use of AI technology in the very areas the AI was designed to be used in. A natural response to this is to seek architectures and substrates in which robots are not conscious.

Further, it may be more efficient for a self-improving superintelligence to eliminate consciousness. Think about how consciousness works in the human case. Only a small percentage of human mental processing is accessible to the conscious mind. Consciousness is correlated with novel learning tasks that require attention and focus. A superintelligence would possess expert-level knowledge in every domain, with rapid-fire computations ranging over vast databases that could include the entire Internet and ultimately encompass an entire galaxy. What would be novel to it? What would require slow, deliberative focus? Wouldn’t it have mastered everything already? Like an experienced driver on a familiar road, it could rely on nonconscious processing. The simple consideration of efficiency suggests, depressingly, that the most intelligent systems will not be conscious. On cosmological scales, consciousness may be a blip, a momentary flowering of experience before the universe reverts to mindlessness.

If people suspect that AI isn’t conscious, they will likely view the suggestion that intelligence tends to become postbiological with dismay. And it heightens our existential worries. Why would nonconscious machines have the same value we place on biological intelligence, which is conscious?

Soon, humans will no longer be the measure of intelligence on Earth. And perhaps already, elsewhere in the cosmos, superintelligent AI, not biological life, has reached the highest intellectual plateaus. But perhaps biological life is distinctive in another significant respect—conscious experience. For all we know, sentient AI will require a deliberate engineering effort by a benevolent species, seeking to create machines that feel. Perhaps a benevolent species will see fit to create their own AI mindchildren. Or perhaps future humans will engage in some consciousness engineering, and send sentience to the stars.

SUSAN SCHNEIDER is an associate professor of philosophy and cognitive science at the University of Connecticut and an affiliated faculty member at the Institute for Advanced Study, the Center of Theological Inquiry, YHouse, and the Ethics and Technology Group at Yale’s Interdisciplinary Center of Bioethics. She has written several books, including Science Fiction and Philosophy: From Time Travel to Superintelligence. For more information, visit SchneiderWebsite.com.

It may not feel like anything to be an alien

December 23, 2016

An alien message in the movie Arrival (credit: Paramount Pictures)

“I’m sorry, Dave” — HAL in 2001: A Space Odyssey. If you think intelligent machines are dangerous, imagine what intelligent extraterrestrial machines could do. (credit: YouTube/Warner Bros.)

The Arecibo message was broadcast into space a single time, for 3 minutes, in November 1974 (credit: SETI Institute)

Westworld (credit: HBO)

The conditions required for consciousness are actively debated by AI researchers, neuroscientists, and philosophers of mind. Resolving them might require an empirical approach that is informed by philosophy—a means of determining, on a case-by-case basis, whether an information-processing system supports consciousness, and under what conditions.

Here’s a suggestion, a way we can at least enhance our understanding of whether silicon supports consciousness. Silicon-based brain chips are already under development as a treatment for various memory-related conditions, such as Alzheimer’s and post-traumatic stress disorder. If, at some point, chips are used in areas of the brain responsible for conscious functions, such as attention and working memory, we could begin to understand whether silicon is a substrate for consciousness. We might find that replacing a brain region with a chip causes a loss of certain experience, like the episodes that Oliver Sacks wrote about. Chip engineers could then try a different, non-neural, substrate, but they may eventually find that the only “chip” that works is one that is engineered from biological neurons. This procedure would serve as a means of determining whether artificial systems can be conscious, at least when they are placed in a larger system that we already believe is conscious.

Even if silicon can give rise to consciousness, it might do so only in very specific circumstances; the properties that give rise to sophisticated information processing (and which AI developers care about) may not be the same properties that yield consciousness. Consciousness may require consciousness engineering—a deliberate engineering effort to put consciousness in machines.

Here’s my worry. Who, on Earth or on distant planets, would aim to engineer consciousness into AI systems themselves? Indeed, when I think of existing AI programs on Earth, I can see certain reasons why AI engineers might actively avoid creating conscious machines.

Robots are currently being designed to take care of the elderly in Japan, clean up nuclear reactors, and fight our wars. Naturally, the question has arisen: Is it ethical to use robots for such tasks if they turn out to be conscious? How would that differ from breeding humans for these tasks? If I were an AI director at Google or Facebook, thinking of future projects, I wouldn’t want the ethical muddle of inadvertently designing a sentient system. Developing a system that turns out to be sentient could lead to accusations of robot slavery and other public-relations nightmares, and it could even lead to a ban on the use of AI technology in the very areas the AI was designed to be used in. A natural response to this is to seek architectures and substrates in which robots are not conscious.

Further, it may be more efficient for a self-improving superintelligence to eliminate consciousness. Think about how consciousness works in the human case. Only a small percentage of human mental processing is accessible to the conscious mind. Consciousness is correlated with novel learning tasks that require attention and focus. A superintelligence would possess expert-level knowledge in every domain, with rapid-fire computations ranging over vast databases that could include the entire Internet and ultimately encompass an entire galaxy. What would be novel to it? What would require slow, deliberative focus? Wouldn’t it have mastered everything already? Like an experienced driver on a familiar road, it could rely on nonconscious processing. The simple consideration of efficiency suggests, depressingly, that the most intelligent systems will not be conscious. On cosmological scales, consciousness may be a blip, a momentary flowering of experience before the universe reverts to mindlessness.

If people suspect that AI isn’t conscious, they will likely view the suggestion that intelligence tends to become postbiological with dismay. And it heightens our existential worries. Why would nonconscious machines have the same value we place on biological intelligence, which is conscious?

Soon, humans will no longer be the measure of intelligence on Earth. And perhaps already, elsewhere in the cosmos, superintelligent AI, not biological life, has reached the highest intellectual plateaus. But perhaps biological life is distinctive in another significant respect—conscious experience. For all we know, sentient AI will require a deliberate engineering effort by a benevolent species, seeking to create machines that feel. Perhaps a benevolent species will see fit to create their own AI mindchildren. Or perhaps future humans will engage in some consciousness engineering, and send sentience to the stars.

SUSAN SCHNEIDER is an associate professor of philosophy and cognitive science at the University of Connecticut and an affiliated faculty member at the Institute for Advanced Study, the Center of Theological Inquiry, YHouse, and the Ethics and Technology Group at Yale’s Interdisciplinary Center of Bioethics. She has written several books, including Science Fiction and Philosophy: From Time Travel to Superintelligence. For more information, visit SchneiderWebsite.com.

Sunday, December 1, 2013

A digital umbilical cord for life extension

A project at Lifenaut aims to create a digital image vault of your life history where avatars of the future will live forever. At least, that is my interpretation. It reminded me of a project Ray Kurzweil has to bring his father back to life. He has a storeroom full of bankers boxes of information about him. Probably that is all digitized now. This is not an actual eHealth application like the Virtual Self, which can be used for diagnostic simulations.

Create a Mind File

How it Works

Upload biographical pictures, videos, and documents to a digital archive that will be preserved for generations.

Organize through geo mapping, timelines, and tagging, a rich portrait of information about you. The places you’ve been and the people you’ve met can be stored.

Create a computer-based avatar to interact and respond with your attitudes, values, mannerisms and beliefs.

Connect with other people who are interested in exploring the future of technology and how it can enhance the quality of our lives.

Thursday, June 6, 2013

IEEE conference in Toronto: Theme - SmartWorld

If I find the pocket change for registration, I am there in a heartbeat. Two panelists or speakers of interest to eHealth students are Dr. Ann Cavoukian, Privacy Commissioner of Ontario, and Dr. Alex Jadad, founder of the Centre for Global eHealth Innovation at the University of Toronto. Having Ray Kurzweil, Steve Mann, Marvin Minsky, et al. there is just "icing on the cake".

Website for IEEE ISTAS'13: http://veillance.me

Theme - "Smartworld"

Living in a Smart World - People as Sensors

ISTAS'13 presenters and panellists will address the implications of living in smartworlds - smart grids, smart infrastructure, smart homes, smart cars, smart fridges, and with the advent of body-worn sensors like cameras, smart people.

The environment around us is becoming "smarter". Soon there will be a camera in nearly every streetlight enabling better occupancy sensing, while many appliances and everyday products such as automatic flush toilets, and faucets are starting to use more sophisticated camera-based computer-vision technologies. Meanwhile, what happens when people increasingly wear these same sensors?

A smart world where people wear sensors such as cameras, physiological sensors (e.g. monitoring temperature, physiological characteristics), location data loggers, tokens, and other wearable and embeddable systems presents many direct benefits, especially for personal applications. However, these same "Wearable Computing" technologies and applications have the potential to become mechanisms of control by smart infrastructure monitoring those individuals that wear these sensors.

There are great socio-ethical implications that will stem from these technologies and fresh regulatory and legislative approaches are required to deal with this new environment.

This event promises to be the beginning of outcomes related to:

- Consumer awareness

- Usability

- A defined industry cluster of new innovators

- Regulatory demands for a variety of jurisdictions

- User-centric engineering development ideas

- Augmented Reality design

- Creative computing

- Mobile learning applications

- Wearables as an assistive technology

"Smart people" interacting with smart infrastructure means that intelligence is driving decisions. In essence, technology becomes society.

Professor Mann of the University of Toronto will be speaking in the opening keynote panel with Marvin Minsky, acclaimed Professor of Media Arts and Sciences at MIT, whose major contributions include the groundbreaking book The Society of Mind and helping to define the field of Artificial Intelligence (AI).

General Chair of ISTAS'13 and formerly a member of the MIT Media Lab under the guidance of Nicholas Negroponte in the 1990s, Mann has long been considered the father of wearable computing and AR in this young field.

Saturday, March 23, 2013

Interfaith Dialogue on Avatar Immortality

The Avatar project created by Russian billionaire Dmitry Itskov has a remarkable website, including this Interfaith Dialogue on the spiritual future of humanity as it approaches these technological milestones:

- A robotic copy of a human body remotely controlled by BCI - 2015 - 2020

- An Avatar in which a human brain is transplanted at the end of one's life - 2020 - 2025

- An Avatar with an artificial brain in which a human personality is transplanted at the end of one's life - 2030 - 2035

- A hologram like avatar - 2040 - 2045

There is a lot of heavyweight endorsement for this project if you look at the list of names in their letter to UN Secretary-General Ban Ki-moon. There are also a lot more names from the religious traditions on that letter than are included in this video of interfaith dialogue. One of the names I had to look up was Dr. James Martin, a "British author and entrepreneur and the largest individual benefactor to the University of Oxford in its 900-year history". I probably should have heard of him, A) because I have worked with computer information technology for 30 years, and B) because I work in a university where large donations by benefactors are the essential component for driving research and keeping university infrastructure and education alive. I read the biography of Dr. William Osler several times, the masterful version written by Michael Bliss. When Osler was enticed to go to Johns Hopkins, one of the first hospitals combined with a university-level teaching medical school, it was just an architectural blueprint at the time. But it owed its existence to visionary philanthropic benefactors, and it was a turning point in philanthropic largesse. Millionaires were starting to seek immortality for their names by giving money to universities instead of churches, except for eccentrics like Carnegie, who thought building free libraries and educating the masses was more worthy of the life energy contained in his hoard of lucre. It now seems like giving money to immortality projects is the ultimate form of philanthropic immortality.

Sunday, March 17, 2013

The Transhumanist Reader: Classical and Contemporary Essays

This was from the mailing list of the Institute for Ethics and Emerging Technologies. Quite a frightening list of chapter titles when I first read it. The future will be stranger than we think, but now that I think about it, maybe the future will just be normal, because it seems to be kind of normal now. Isn't that strange? http://ieet.org/

NEW BOOKS BY IEETers

The Transhumanist Reader: Classical and Contemporary Essays (2013)

by eds. Max More and Natasha Vita-More

Table of Contents

Part I Roots and Core Themes

1 The Philosophy of Transhumanism, Max More

2 Aesthetics: Bringing the Arts & Design into the Discussion of Transhumanism, Natasha Vita-More*

3 Why I Want to be a Posthuman When I Grow Up, Nick Bostrom*

4 Transhumanist Declaration (2012), Various

5 Morphological Freedom – Why We Not Just Want It, but Need It, Anders Sandberg

Part II Human Enhancement: The Somatic Sphere

6 Welcome to the Future of Medicine, Robert A. Freitas Jr.

7 Life Expansion Media, Natasha Vita-More*

8 The Hybronaut Affair: A Ménage of Art, Technology, and Science, Laura Beloff

9 Transavatars, William Sims Bainbridge*

10 Alternative Biologies, Rachel Armstrong

Part III Human Enhancement: The Cognitive Sphere

11 Re-Inventing Ourselves: The Plasticity of Embodiment, Sensing, and Mind, Andy Clark

12 Artificial General Intelligence and the Future of Humanity, Ben Goertzel*

13 Intelligent Information Filters and Enhanced Reality, Alexander “Sasha” Chislenko

14 Uploading to Substrate-Independent Minds, Randal A. Koene

15 Uploading, Ralph C. Merkle

Part IV Core Technologies

16 Why Freud Was the First Good AI Theorist, Marvin Minsky

17 Pigs in Cyberspace, Hans Moravec

18 Nanocomputers, J. Storrs Hall

19 Immortalist Fictions and Strategies, Michael R. Rose

20 Dialogue between Ray Kurzweil and Eric Drexler

Part V Engines of Life: Identity and Beyond Death

21 The Curate’s Egg of Anti-Anti-Aging Bioethics, Aubrey de Grey*

22 Medical Time Travel, Brian Wowk

23 Transhumanism and Personal Identity, James Hughes*

24 Transcendent Engineering, Giulio Prisco*

Part VI Enhanced Decision-Making

25 Idea Futures: Encouraging an Honest Consensus, Robin Hanson

26 The Proactionary Principle: Optimizing Technological Outcomes, Max More

27 The Open Society and Its Media, Mark S. Miller, with E. Dean Tribble, Ravi Pandya, and Marc Stiegler

Part VII Biopolitics and Policy

28 Performance Enhancement and Legal Theory: An Interview with Professor Michael H. Shapiro

29 Justifying Human Enhancement: The Accumulation of Biocultural Capital, Andy Miah*

30 The Battle for the Future, Gregory Stock

31 Mind is Deeper Than Matter: Transgenderism, Transhumanism, and the Freedom of Form, Martine Rothblatt*

32 For Enhancing People, Ronald Bailey

33 Is Enhancement Worthy of Being a Right?, Patrick D. Hopkins*

34 Freedom by Design: Transhumanist Values and Cognitive Liberty, Wrye Sententia*

Part VIII Future Trajectories: Singularity

35 Technological Singularity, Vernor Vinge

36 An Overview of Models of Technological Singularity, Anders Sandberg

37 A Critical Discussion of Vinge’s Singularity Concept, David Brin*, Damien Broderick, Nick Bostrom, Alexander “Sasha” Chislenko, Robin Hanson, Max More, Michael Nielsen, and Anders Sandberg

Part IX The World’s Most Dangerous Idea

38 The Great Transition: Ideas and Anxieties, Russell Blackford*

39 Trans and Post, Damien Broderick

40 Back to Nature II: Art and Technology in the Twenty-First Century, Roy Ascott

41 A Letter to Mother Nature, Max More

42 Progress and Relinquishment, Ray Kurzweil

*IEET Fellow, Scholar or Staff

The Nano Revolution: More than Human

CBC - The Nature of Things with David Suzuki - The Nano Revolution: More than Human

This video apparently is only viewable in Canada. It is an excellent view of the future of nano technology at the "point of care" in medicine. It also has an excellent scenario of "post humans". It is worth watching just for the computer graphics effects on nanotechnology models.

Monday, January 28, 2013

I am of the nature to grow old

A Buddhist friend and teacher reminded me in an email that:

"I am of the nature to grow old" comes from an ancient teaching called the Upajjhatthana Sutta. And just the other day I was reading another posting in which someone quoted Ray Kurzweil as saying, “If you remain in good health for 20 more years, you may never die.” (I guess he was referring to Boomers, and if you don't know who they are, God bless you.) Or at least you will have a good chance of enjoying the life extension technologies which surely are at this very moment in the process of being exponentially developed.

The Buddha advised: "These are the five facts that one should reflect on often, whether one is a woman or a man, lay or ordained."[4]

Five remembrances

Below are two English translations and the original Pali text of the "five remembrances":

| | Translation 1 | Translation 2 | Pali |
| 1. | I am sure to become old; I cannot avoid ageing. | I am subject to aging, have not gone beyond aging. | Jarādhammomhi jaraṃ anatīto.... |
| 2. | I am sure to become ill; I cannot avoid illness. | I am subject to illness, have not gone beyond illness. | Vyādhidhammomhi vyādhiṃ anatīto.... |
| 3. | I am sure to die; I cannot avoid death. | I am subject to death, have not gone beyond death. | Maraṇadhammomhi maraṇaṃ anatīto.... |
| 4. | I must be separated and parted from all that is dear and beloved to me. | I will grow different, separate from all that is dear and appealing to me. | Sabbehi me piyehi manāpehi nānābhāvo vinābhāvo.... |
| 5. | I am the owner of my actions, heir of my actions, actions are the womb (from which I have sprung), actions are my relations, actions are my protection. Whatever actions I do, good or bad, of these I shall become their heir.[1] | I am the owner of my actions, heir to my actions, born of my actions, related through my actions, and have my actions as my arbitrator. Whatever I do, for good or for evil, to that will I fall heir.[2] | Kammassakomhi kammadāyādo kammayoni kammabandhū kammapaṭisaraṇo yaṃ kammaṃ karissāmi kalyāṇaṃ vā pāpakaṃ vā tassa dāyādo bhavissāmī....[3] |

Thursday, December 13, 2012

#Cmdr_Hadfield - tweets from the space station

As of today, Commander Hadfield is still in quarantine, waiting for the Soyuz launch that will take him to the International Space Station, where he will stay almost half a year. I am going to try to follow his Twitter account. He had an interesting post today pointing to a medical article that talks about how life in space is not good for life extension: it can be compared to the most sedentary, sofa-bound lifestyle on Earth! I would like to learn more about the telehealth precautions they might have planned.

Tom Blackwell | Dec 12, 2012 9:24 PM ET | Last Updated: Dec 12, 2012 10:48 PM ET

More from Tom Blackwell | @tomblackwellNP

NASA / Getty Images: NASA astronaut Garrett Reisman, STS-132 mission specialist, participates in the mission's first session of extravehicular activity (EVA) as construction and maintenance continue on the International Space Station.

It seems astronauts hovering in weightless environments and earthlings reclining in front of the TV share a surprising trait: both avoid the effects of gravity — and both age rapidly as a result.

Now a unique joint venture between Canada’s health-research and space agencies is investigating the parallels between space flight and terrestrial aging, hoping to find ways to prevent the ill effects of each.

Astronauts and inactive older people suffer similar bone loss, muscle atrophy, blood-vessel changes and even fainting spells, say scientists, and their respective conditions can provide lessons for both domains.

“To me, there really are a lot of overlaps,” said Richard Hughson, a University of Waterloo expert on vascular aging and brain health. “Space flight is the ultimate in sedentary lifestyle. When you’re up in space, you’re floating around, when you want to move a heavy object, you just give it a little push and away it goes.”

Billed as the first formal collaboration of its kind in the world, the project of the Canadian Space Agency and the Canadian Institutes of Health Research hosted a workshop for domestic scientists, doctors and business people in Ottawa earlier this year, and plans a broader international conference in 2013.

Typically in top physical shape, astronauts would seem on the surface to have little in common with seniors, especially those with particularly inactive lifestyles. Yet development of human bodies depends greatly on mechanical forces at play when people walk, lift things and otherwise move the weight of their own bodies or other objects against the ubiquitous pull of gravity.

iPhone EKG Case - Another piece in the Tricorder XPrize?

I thought the iStethoscope was a pretty good missing piece for the Qualcomm Tricorder XPrize. I blogged about this before. Here is another component which fits nicely. It won't be long before a powerful point-of-care diagnostic smartphone finds its way to FDA approval, and an XPrize winner.

This $200 iPhone Case Is An FDA-Approved EKG Machine

HEALTH CARE IS HURTING, AND THE WORLD IS CHANGING. MORE AND MORE, HOSPITALS WILL FIT IN OUR POCKETS.

Most iPhone cases just protect your phone from drops. If you’re getting fancy, it may have a fisheye camera lens or a screen-printed back. But what about diagnosing coronary heart disease, arrhythmia, or congenital heart defects? The AliveCor Heart Monitor is an FDA-approved iPhone case that can be held in your hands (or dramatically pressed against your chest) to produce an EKG/ECG--the infamous green blips pulsing patient-side in hospitals everywhere.

“We think that EKG screening can be as approachable as taking blood pressure,” AliveCor President and CEO Judy Wade tells Co.Design.

There are already apps that take your heartbeat, of course. But there’s a big difference between the fast-paced standards of casual electronics and the strict sanctions of government-approved medical devices. “The heartbeat camera apps are good at wellness,” Wade admits, “but we see ourselves for use by people who want clinical-quality equipment.”

So unlike most iPhone cases that are squirted by Chinese factories at extremely high margins, AliveCor’s case has been in serious development since 2010. Aside from building the gadget itself, to become approved for medical use by the FDA, AliveCor had to participate in two clinical trials to field test both the hardware and the accompanying app. One study investigated how its single-lead EKG compared to a traditional 12-lead device, the other examined if 54 participants could figure out how to use the case properly, with no previous medical training. The latter study was not only successful but led to the diagnosis of two serious heart problems.

THE COMPLICATIONS OF INNOVATING UNDER THE FDA

AliveCor was lucky. Though it took about six months to get the application ready, the approval arrived well within the 90-day approval window, allowing the company to come to market sooner. It was a necessary hassle; FDA approval opens a lot of doors. Instantly, what could be considered some scam iPhone case was marketable to health care professionals--doctors--who’d most likely pay out of pocket for a $200 stethoscope replacement without blinking. FDA approval also allows doctors to prescribe, and potentially have insurance cover, AliveCor’s device for their patients to take home.

But even with an approval in-hand, AliveCor will continue to juggle complicated regulations to stay competitive in the market. For one, the approved monitor was designed for the iPhone 4 and 4S. Before AliveCor can release an iPhone 5 version with the exact same hardware internals, they will need to seek out additional FDA approval. (With previous approval and clinical trials to cite, the process is mostly a formality, but it’s still paperwork that takes more time and resources.)

The company also intends to release an over-the-counter version of the case. The good news is, this device will be eligible for coverage in most employee spending programs. But because of FDA regulations, this OTC version cannot provide the raw EKG data to a consumer who might not know how to interpret the esoteric waveforms. Instead, AliveCor will redesign the app to provide an infographic-esque interpretation of the EKG. “An EKG means something to a trained physician, but we can provide a lot of insights to an untrained consumer that might help explain what triggered a cardiac event,” Wade explains. “Like caffeine is a trigger. With an app, we see being able to offer more insight to an individual about their heart health.”

From a product design standpoint, this second-level data analysis sounds like an ideal, consumer-oriented decision. But from a consumer rights standpoint, why is any government agency standing in the way of consumer access to our own raw data? I can see how strongly my iPhone’s antenna is reaching the nearest cell tower, but I can’t see how well my own heart is ticking inside my body? How absurd is that? Interestingly enough, AliveCor is using this regulation to their advantage, banking on the health care model as it stands now. Its OTC device will offer services to refer you to a physician for deeper result analysis (and access to your actual waveforms, if you’re so concerned), which will provide a backend revenue stream beyond typical hardware sales. Imagine the potential: In-app purchase for a follow-up appointment.

An eagle at the Edinburgh Zoo had been shot, but AliveCor’s case measured a heartbeat through its feathers. The eagle was deemed fit enough for surgery, underwent the procedure and lived. Needless to say, the device has veterinary applications as well.

THE FUTURE OF MEDICINE

For the time being, AliveCor is continuing to develop their EKG cases into a full line, including that OTC device, which will also be a universal version working for both iOS and Android. (Since the case actually communicates with the phone wirelessly, once the software programming is done, these product differentiations are largely cosmetic in nature.) No doubt, AliveCor sees the case as a stepping stone to the company’s overall vision, that “everyone should have their health at their fingertips,” Wade says. But the company will have to solve a lot of larger problems that the industry is struggling with to make that future a reality.

While diagnostic devices may be coming to the phone, we still have no standards to get such diagnostic information back to our doctors. AliveCor explained to me that it can send a push notification to my cardiologist every time I check my heart, but does my cardiologist really want push notifications all day from their client list? Or worse, would any doctor want a devastating cardiac episode just sitting under 30 other messages in the iOS Notification Center? Should my phone text or not text emergency information? Should doctors be held accountable for app-based information? Should medical devices be regulated to automatically dial 911 in cases of emergency?

No doubt, AliveCor’s Heart Monitor is another case of affordable consumer technology outpacing our brick-and-mortar hospitals, but to the credit of our hospitals, affordable consumer technology is outpacing most of the world. Still, just as Domino’s has figured out how to deliver me a pizza through an app (no doubt, saving a few cents in the process), so, too, will the medical community come around to juggling big data at the individual patient level. The real question is, will FDA regulations leave space for the little guys--the weekend app warriors and the Kickstarters--to innovate responsibly, at a price cheaper than clinical trials and a timeframe faster than paperwork?

[Hat tip: Co.Exist]
